Case Study: Ethics in AI/ML

NumFOCUS tools enable accountability and ethics within AI and Machine Learning

Alejandro Saucedo is Chief Scientist at The Institute for Ethical AI & Machine Learning, a UK-based research center that carries out cross-functional research into responsible machine learning systems. Saucedo is what he calls “a practitioner”—he has been a software developer for 10 years and before joining the Institute was leading a machine learning department focused on automation of regulatory compliance, working directly with lawyers to extract their domain expertise into features and models.

NumFOCUS tools are being used to create a future in which AI and Machine Learning systems are chosen and implemented responsibly and ethically.

Tools and Processes to Empower Ethical Practitioners

The Institute for Ethical AI & Machine Learning was founded primarily by data science, software engineering, and policy practitioners in January 2018. Its founding purpose was to address the challenge that although at the time there were many high-level conversations about AI and ethics happening, “what nobody could really understand is what is the infrastructure required and the processes that need to be in place” to ensure that ML/AI avoids doing harm to humans.

even people with the right moral commitments may still find themselves in an immoral position if they don’t know how to execute properly

The Institute was developed with a focus on providing tools to empower practitioners—not just data scientists in the tech industry, but a variety of industries, including construction, infrastructure, transport, finance, insurance, and more. According to Saucedo, the high-level mission of the Institute is to “empower people that have the right intentions and restrict the ones that don’t.” This is because even people with the right moral commitments may still find themselves in an immoral position if they don’t know how to execute properly. This founding philosophy is what led the Institute to focus on developing “not just tools, but tools plus process.”

The tools and process developed by The Institute for Ethical AI & Machine Learning are a result of collaborations between programmers, data scientists, ethicists, philosophers, and psychologists, as well as managers with no technical expertise. “It’s quite interesting to see the amount of traction this has been able to get beyond the data science community,” says Saucedo.

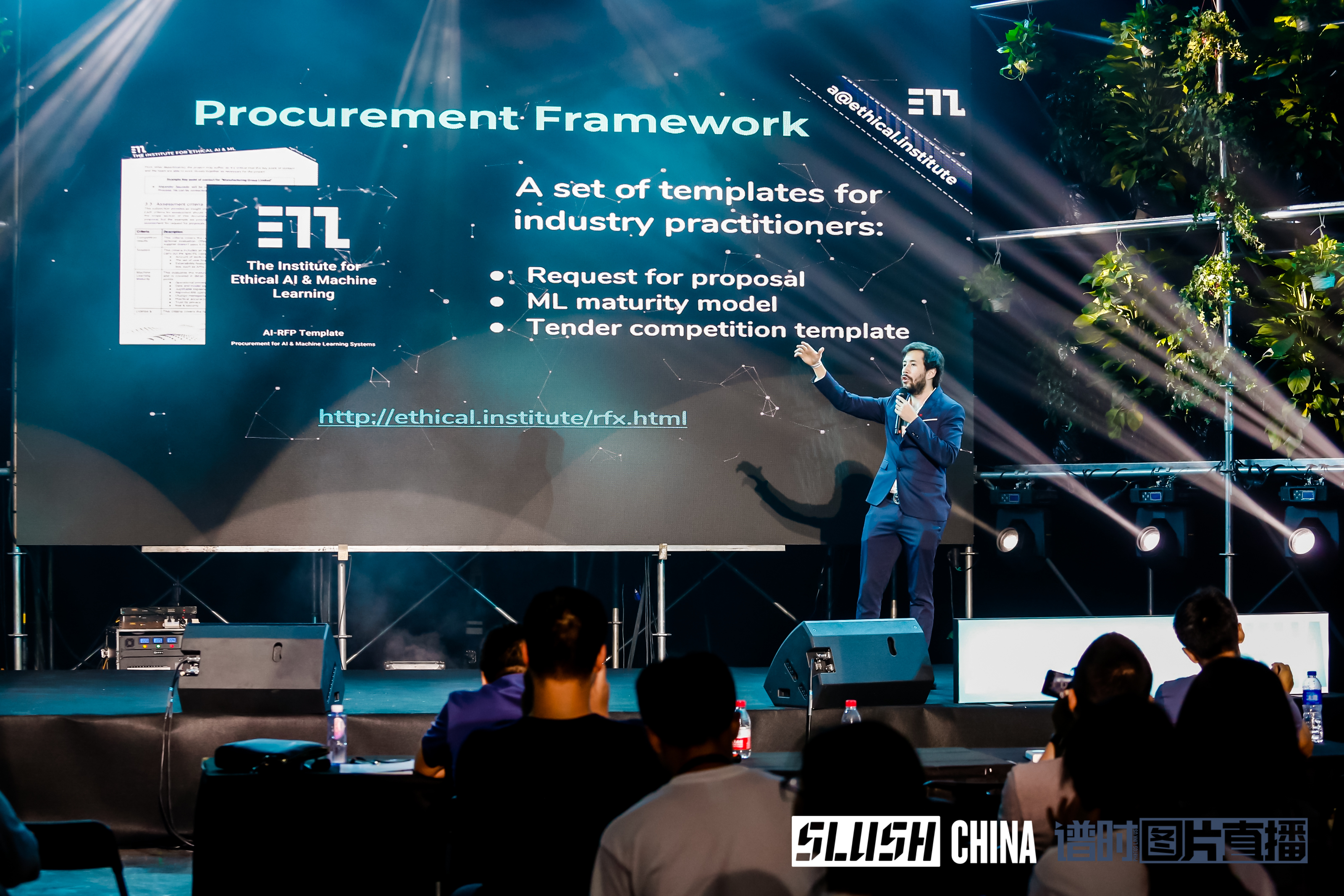

The procurement framework, for example, is a set of templates that allows procurement managers to ask questions of potential suppliers regarding their “machine learning maturity level,” and ensures practitioners “actually have what their Powerpoint says they have.” In more practical terms, the machine learning maturity model the Institute created consists of questions analogous to that of a security questionnaire, which ask questions such as: do you have the capabilities to use statistical metrics beyond accuracy? Can you version models? Monitor the metrics of your models?

Saucedo clarifies, “Of course, we don’t expect all suppliers to have absolutely all the points required by the machine learning maturity model, but it offers a way to assess objectively the required capabilities that suppliers have internally, together with the commitment for some of these to be in their roadmap.”

Tools and Infrastructure for Compliance with Ethical Standards

For the past two years, the Institute has been working on an Algorithmic Bias Consideration Standard with the IEEE, and the tooling and processes being developed by the Institute are designed to assist in showing compliance with this standard.

One of their contributions from the technical side is a proposal to extend the existing CRISP DM model. They propose that data analysis, model evaluation, and production monitoring should be key steps that involve not just the data scientists doing the processing, but also the right domain experts who can provide “the green flag, expert knowledge, or whatever is required.” According to Saucedo, because launching an at-scale application reaching millions of people will require a higher level of scrutiny than merely deploying a prototype, it’s important to have the right risk assessment up front.

“This has allowed for a higher level of interpretability and governance across data science projects, which is critical as this ensures accountability is in the right place, and makes sure that, for example, a junior data scientist doesn’t get unfairly blamed for an accident that may arise in the lifecycle of a project.”

From Procurement Framework to Explainability Tool

The procurement framework evolved into an XAI, an explainability tool that aims to implement the framework, which is built entirely upon NumFOCUS projects. The Institute for Ethical AI & Machine Learning chose NumFOCUS tools because they are the most commonly used. “We decided to use pandas as the core backbone of the library with NumPy, as well. […] We use Matplotlib primarily for the actual visualizations, [… and] we are hosting everything on the Jupyter notebooks.”

The Institute has been creating case studies with this explainability toolset around algorithmic bias, managing offensive language in social media, and other general automations. One example implemented in the toolset looks at a data set of loan applications and highlights ways of identifying and removing undesirable biases.

“What then you can do with this library is you can ask whether there is, for example, imbalances.” For instance, you could look at the representation of gender in the data—in the example, there are twice as many male loan applicants as female. Digging further into the imbalance around approved loans, you can see that the actual ratio of approved loans for female applicants is significantly lower. So if you had used this data to train your model, your model would be obviously and significantly biased against female loan applicants, regardless of their actual history or creditworthiness. Saucedo concludes, “What I show later on is that it [the algorithm] actually just learns to reject all loans from women, right, because that’s what gets it the highest accuracy.”

“[the algorithm] actually just learns to reject all loans from women”

Following the Institute’s process, after you clean your data but before you define your features, you should analyze and document potential biases and imbalances in the data set. Rather than attempt to remove all biases (which Saucedo says is impossible), the goal is to identify the undesirable biases in the data. Then the next step is model evaluation, or the question of what statistical metrics you want to use to evaluate it and what are the imbalances you have. For problems like class imbalances, the explainability tool allows for upsampling and downsampling, which enables the user to compensate for under-sampled groups in the data (when more data is not able to be collected for this purpose). It also allows you to look at correlations between things like names and gender. These features empower the user to build a train/test split for a data set that is “balanced.”

Per Saucedo, “In the process we propose, the objective is to ask the questions of: what is the impact of a false positive? Is that higher than the impact of a false negative?” Then, based on that you can decide what to use as your core metric—for example, precision or recall.

Ultimately, the goal of this type of assessment and approach is to design business processes that acknowledge and attempt to remedy undesirable biases in the algorithmic model. Looking at the example data: “Instead of having the model processing every single application, you could actually have the model only processing applications with 80% and up on confidence for your model, and everything below you actually could have a human in the loop.” (See Saucedo’s talk on this subject at PyData London 2019.)

“the goal […] is to design business processes that acknowledge and attempt to remedy undesirable biases in the algorithmic model”

“We decided to use pandas as the core backbone of the library with NumPy, as well”

“We use Matplotlib primarily for the actual visualizations”

“We are hosting everything on Jupyter notebooks”

pandas

NumPy

Jupyter

Matplotlib

The Power of NumFOCUS Tools

Asked what motivated their choice of NumFOCUS open source projects as the backbone of the explainability tool, Saucedo said, “What brings value is the very careful selection of tools in the order proposed.” While many factors motivated their choice, “number one is the adoption” — the fact that such a large percent of people currently involved in the development of data science tools are using NumFOCUS projects, particularly pandas and NumPy. NumFOCUS tools meet where the Institute is most closely focused: at the intersection of programming, data science, and industry. “Not only they are very powerful data science tools, but they are also very powerful programming production tools,” he said. Because of this, in addition to gathering insights from your dataset, when you manage to build a useful model, you can wrap it in a product and make it usable and available.

“Not only they are very powerful data science tools, but they are also very powerful programming production tools”

Partly because the code is easy to understand and has so few dependencies, the Institute is able to use NumFOCUS tools for a wide variety of ethical machine learning and artificial intelligence applications. “NumFOCUS tools have played a key role in us being able to not only create these open source frameworks, but also in performing the analyses that have enabled us to gather the insights we now hold in topics around algorithmic bias, privacy, etc.”